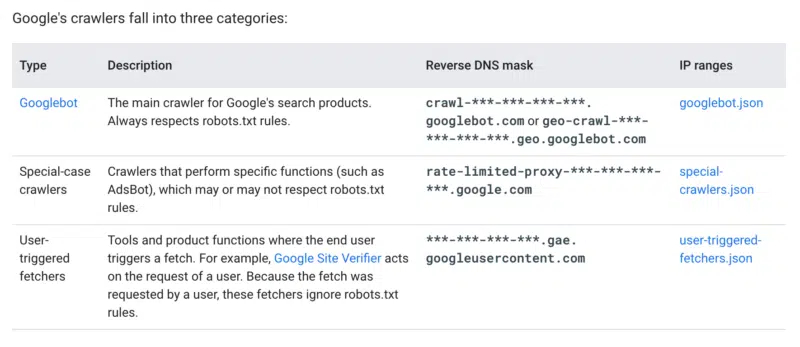

Google has recently published additional details about its crawlers, grouping them into three categories: Googlebot, special-case crawlers, and user-triggered fetchers. Each category has its own list of IP addresses, published as a JSON-formatted file.

Googlebot is the primary crawler for Google’s search products and always adheres to robots.txt rules. Special-case crawlers, such as AdsBot, perform specific functions and may or may not comply with robots.txt rules. User-triggered fetchers are tools and functions activated by an end user, such as Google Site Verifier, or triggered by user actions in Google Search Console.

Google has also listed the IP address ranges and reverse DNS mask for each type of crawler.

Googlebot can be identified by a reverse DNS host name matching `crawl-***-***-***-***.googlebot.com` or `geo-crawl-***-***-***-***.geo.googlebot.com`, while special-case crawlers resolve to `rate-limited-proxy-***-***-***-***.google.com`. User-triggered fetchers resolve to `***-***-***-***.gae.googleusercontent.com`.
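As a rough illustration of how these reverse DNS masks could be used, the Python sketch below maps a host name to one of the three crawler groups and then confirms the result with a forward lookup. The names `HOSTNAME_MASKS`, `classify_hostname`, and `verify_crawler_ip` are hypothetical, and the matching assumes that the masked part of each host name sits between a fixed prefix and a fixed suffix; treat it as a starting point rather than Google's own verification method.

```python
import ipaddress
import socket

# (group, required prefix, required suffix) for each reverse DNS mask.
# Assumption: the masked part of the name sits between prefix and suffix.
HOSTNAME_MASKS = [
    ("googlebot", "crawl-", ".googlebot.com"),
    ("googlebot", "geo-crawl-", ".geo.googlebot.com"),
    ("special-case", "rate-limited-proxy-", ".google.com"),
    ("user-triggered", "", ".gae.googleusercontent.com"),
]


def classify_hostname(hostname):
    """Return the crawler group a host name belongs to, or None."""
    host = hostname.rstrip(".").lower()
    for group, prefix, suffix in HOSTNAME_MASKS:
        if host.startswith(prefix) and host.endswith(suffix):
            return group
    return None


def verify_crawler_ip(ip):
    """Reverse-resolve an IP, classify the name, then forward-confirm it.

    Returns the group name, or None if any step fails. Needs network access.
    """
    try:
        hostname = socket.gethostbyaddr(ip)[0]
    except OSError:
        return None
    group = classify_hostname(hostname)
    if group is None:
        return None
    try:
        infos = socket.getaddrinfo(hostname, None)
    except OSError:
        return None
    # The forward lookup must lead back to the IP we started from.
    forward = {ipaddress.ip_address(info[4][0]) for info in infos}
    return group if ipaddress.ip_address(ip) in forward else None
```

The forward lookup matters because anyone who controls the reverse DNS zone for their own IP range can make it return a Google-looking host name; only a name that resolves back to the same address should be trusted.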

This update makes it easier to understand how Google's crawlers operate, when they comply with robots.txt rules, and how to identify them accurately. With this information, site owners can block specific crawlers without interfering with Google’s main crawler, Googlebot.
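One way to act on the published IP lists is to check which group, if any, a requesting address falls into before deciding whether to block it. The sketch below is illustrative only: `SAMPLE_RANGES` and `crawler_group` are hypothetical names, the prefixes are reserved documentation ranges rather than real Google addresses, and the layout (a `prefixes` list whose entries carry an `ipv4Prefix` or `ipv6Prefix` key) is an assumption about the JSON files that should be checked against the files Google actually publishes.

```python
import ipaddress

# Placeholder data using reserved documentation ranges, NOT real Google ranges.
# In practice each value would be the parsed JSON file for that crawler group.
SAMPLE_RANGES = {
    "googlebot": {"prefixes": [{"ipv4Prefix": "192.0.2.0/24"},
                               {"ipv6Prefix": "2001:db8:1::/48"}]},
    "special-case": {"prefixes": [{"ipv4Prefix": "198.51.100.0/24"}]},
    "user-triggered": {"prefixes": [{"ipv4Prefix": "203.0.113.0/24"}]},
}


def crawler_group(ip, ranges_by_group):
    """Return the name of the group whose ranges contain ip, or None."""
    addr = ipaddress.ip_address(ip)
    for group, doc in ranges_by_group.items():
        for entry in doc.get("prefixes", []):
            prefix = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
            # A v4 address is never "in" a v6 network (and vice versa),
            # so mixed lists are safe to scan.
            if prefix and addr in ipaddress.ip_network(prefix):
                return group
    return None


# Example policy: block special-case crawlers, leave Googlebot alone.
BLOCKED_GROUPS = {"special-case"}


def should_block(ip, ranges_by_group=SAMPLE_RANGES):
    return crawler_group(ip, ranges_by_group) in BLOCKED_GROUPS
```

Because the published ranges can change over time, a real deployment would presumably reload the JSON files on a schedule instead of hard-coding them.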

Google is believed to have made this update in response to feedback about the GoogleOther crawler it recently announced. The update is useful for webmasters who need to identify and distinguish between Google’s various crawlers.
